Driven by an insatiable curiosity and a commitment to innovation, I leverage my expertise in data science and engineering to transform complex datasets into actionable insights, empowering teams to make data-driven decisions and shaping the future of analytics.

Microsoft Power BI / Microsoft Fabric

Implementing robust ETL workflows using Azure / SSIS

SQL for data manipulation and analysis

Software Engineer (Business Intelligence)

BSc (Hons) Information Technology Specialised in Data Science

Second Class Upper Division. CGPA: 3.58

Vice President (Education) of the Data Science Student Community.

Dean's List nominee in Year 1, Year 2 and Year 4 for academic excellence.

Pearson Edexcel International Advanced Level

Mathematics - A*

Chemistry - B

Physics - B

I enjoy solving complex problems and building efficient solutions with languages like Python, Java and SQL.

Turning raw data into actionable insights is my forte, driving better decisions and strategies.

I thrive on connecting with audiences, sharing ideas with clarity, and inspiring action through engaging presentations.

Sharing knowledge through writing fuels my creativity and keeps me connected to the tech community.

An avid sports enthusiast, I thrive on teamwork, strategy, and staying active.

Constantly exploring new technologies, I stay ahead in the ever-evolving world of innovation.

I excel at breaking down complex challenges and finding innovative, practical solutions.

I enjoy guiding teams, fostering collaboration, and achieving shared goals.

Listening to music fuels my creativity and provides a balance between focus and relaxation.

Technologies: Microsoft Fabric, Dataflow Gen 2, Notebooks, Data Pipeline, Data Warehouse, Lakehouse

Extracted data from multiple sources using Copy Data activity and Dataflow Gen 2. Designed and implemented robust ETL pipelines in Microsoft Fabric to ingest, clean, and transform data into a Lakehouse and Warehouse. Performed incremental loading to ensure efficiency and accuracy. Built semantic models and developed interactive reports to provide real-time business insights, supporting data-driven decision-making.

Technologies: Data Modelling, Power BI, SQL Server, Data Warehouse

Developed interactive Power BI dashboards and apps to track sales KPIs, identify trends, and forecast revenue, enabling stakeholders to make data-driven decisions.

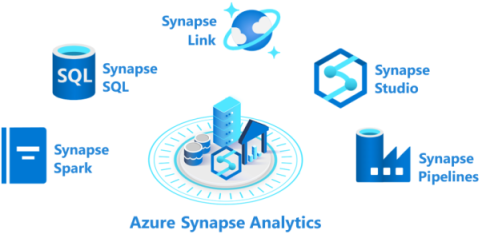

Technologies: Azure Synapse, SQL, Data Lake, Data Warehouse

Built and deployed Azure Synapse pipelines to process streaming data and load it into a data warehouse, optimizing ETL workflows and improving processing efficiency.

Technologies: SSIS, SSAS, SQL Server, Excel, Data Warehouse

Designed and automated the extraction, transformation, and loading (ETL) of financial data from multiple sources into staging and a data warehouse, ensuring data accuracy and significantly reducing manual effort. Implemented an SSAS Cube to create a multidimensional data model, enabling advanced analytical capabilities. Connected the SSAS Cube to Excel for streamlined data retrieval and reporting, providing users with intuitive and efficient access to insights.

Technologies: Python, Flask, REST APIs, Jenkins

Developed a web application for inventory management, featuring functionalities such as stock tracking, order management, and reporting. Designed and implemented REST APIs to enable seamless integration with other applications. Leveraged a Jenkins pipeline to automate the deployment of the application and APIs to a Kubernetes cluster, ensuring scalability and reliability for multi-user access.

Technologies: Java, Spring Boot, REST APIs, Postman

Designed and implemented a scalable REST API for an e-commerce platform to manage core functionalities, including user authentication, product management, and order processing. Utilized Postman for comprehensive API testing and validation. Created detailed API documentation to ensure seamless integration for developers and stakeholders.

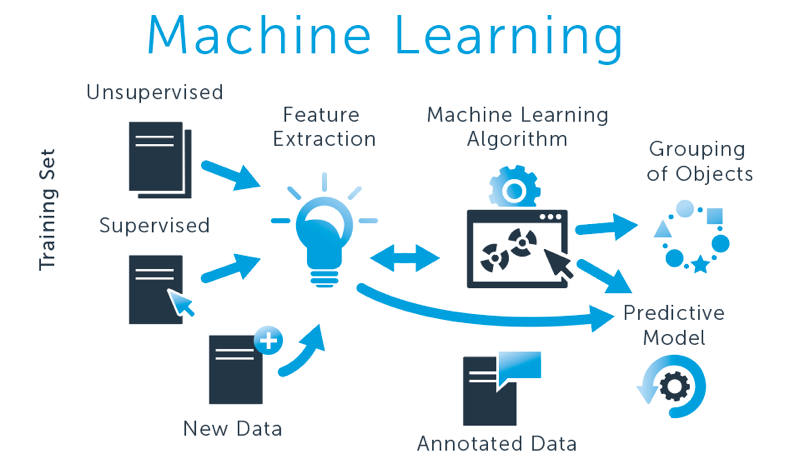

Technologies: Python, Scikit-learn, Pandas, Jupyter Notebook

Created a classification model to predict customers' next payment dates using historical transaction data, improving cash flow management.

GraphQL, a powerful query language for APIs, allows developers to query multiple data sources using a single API and retrieve exactly the data they need. This article walks through setting up a GraphQL API in Microsoft Fabric, with a focus on the essentials of querying data and managing relationships effectively.

Read more

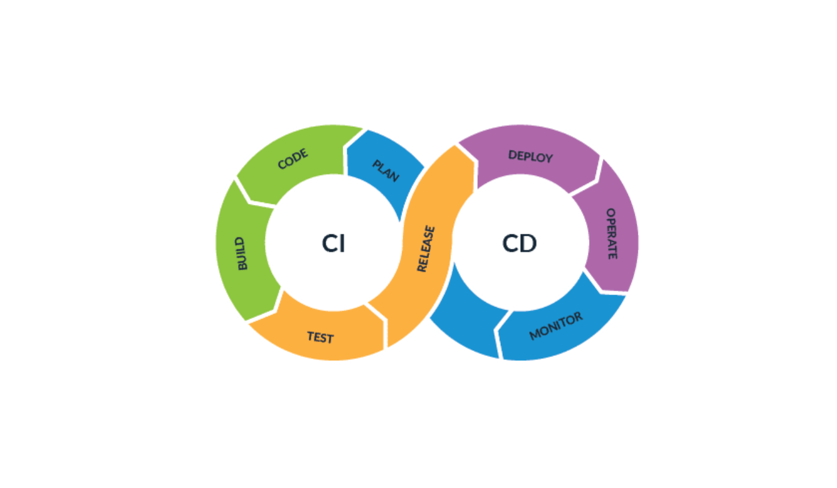

I work in business intelligence, where my primary focus is on transforming data into actionable insights. However, in today's fast-paced tech landscape, understanding the broader ecosystem, including deployment processes, is crucial.

This is where Continuous Integration and Continuous Deployment (CI/CD) come into play.

Even though my day-to-day work revolves around business intelligence, I believe it's essential to grasp the fundamentals of CI/CD to ensure that the applications and solutions we develop are delivered efficiently and reliably.

Read more

Power BI is all about making data work for you. In this article, we will explore an essential DAX function, CROSSJOIN, to unlock the full potential of your data. This function is a pathway to extracting valuable insights and facilitating data-driven decisions. Let's jump right in and explore.

Read more
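As a rough illustration of the idea behind CROSSJOIN, which returns every combination of rows from the tables it is given, here is a minimal Python sketch. It is not DAX and not taken from the article; the `cross_join` helper and the sample `products` and `regions` tables are hypothetical, invented only to show the Cartesian-product behaviour.

```python
from itertools import product


def cross_join(left, right):
    """Pair every row of `left` with every row of `right` (Cartesian product)."""
    return [{**l, **r} for l, r in product(left, right)]


# Hypothetical sample tables, invented for illustration only.
products = [{"Product": "Laptop"}, {"Product": "Phone"}]
regions = [{"Region": "North"}, {"Region": "South"}, {"Region": "West"}]

combinations = cross_join(products, regions)

# 2 products x 3 regions gives one row per (product, region) pair.
for row in combinations:
    print(row["Product"], row["Region"])
```

A table like this is handy as a scaffold: it contains a row for every product and region pair, including pairs that have no sales yet.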

A bar chart is a graphical representation of data. Bar charts are helpful for comparing and displaying data, especially when the data is categorized. They are frequently used in business, economics, and the social sciences. Apache Superset is a modern, enterprise-ready business intelligence web application.

Read more

This highlights the importance of transforming raw data into a clean, structured format to improve the effectiveness of market basket analysis. Using a practical example, it demonstrates the process of preparing data for association rule mining, which uncovers valuable patterns and co-occurrences in transactional data.

Read more
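The kind of preparation described above can be sketched in a few lines of Python. This is only an assumed example, not the article's own code: the `to_baskets` and `one_hot` helpers and the sample `rows` are hypothetical. It groups raw (order, item) rows into one basket per order, normalises item names, and builds the true/false item matrix that association rule mining tools typically expect.

```python
from collections import defaultdict


def to_baskets(rows):
    """Group (order_id, item) rows into one set of cleaned item names per order."""
    baskets = defaultdict(set)
    for order_id, item in rows:
        # Trim whitespace and lower-case so "Bread" and "bread " count as one item.
        baskets[order_id].add(item.strip().lower())
    return dict(baskets)


def one_hot(baskets):
    """Return the sorted item list and a per-order list of True/False flags."""
    items = sorted(set().union(*baskets.values()))
    matrix = {oid: [item in basket for item in items] for oid, basket in baskets.items()}
    return items, matrix


# Hypothetical raw transaction rows, invented for illustration only.
rows = [(1, "Bread"), (1, "milk "), (2, "bread"), (2, "Butter"), (2, "bread")]

baskets = to_baskets(rows)
items, matrix = one_hot(baskets)
print(items)
print(matrix)
```

Using sets per order removes duplicate line items, so each item counts once per basket regardless of quantity.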

Data mining, also known as Knowledge Discovery from Data (KDD), is all about discovering useful hidden information and knowledge in massive collections of data. It is a process of discovering trends and patterns in large datasets to surface relevant insights. Data mining has its origins in classical statistics, artificial intelligence, and machine learning.

Read more